OSG Consortium

Advancing Open Science through High Throughput Computing

OSDF

The Open Science Data Federation (OSDF) enables users and institutions to share data files and storage capacity.

Leveraging the OSDF, providers can make their datasets available to a wide variety of compute users, such as:

- Browsers

- Jupyter Notebooks

- HTC Environments, such as the OSPool

OSPool

No Proposal, No Allocation, No Cost

The OSPool provides its users with fair-share access to compute and storage capacity contributed by university campuses and government-supported supercomputing institutions.

Sign up now and join the hundreds of researchers and educators already using the OSPool!


Get connected with our community!

Through the use of high throughput computing, NRAO delivers one of the deepest radio images of space

The National Radio Astronomy Observatory’s collaboration with the NSF-funded PATh and Pelican projects has led to the successful imaging of deep space.

News

Bioinformatics Café: Building Community and Bridging Computing and Biology

2026 CHTC Researcher Forum

HTCondor Withstands CERN’s Massive Stress Test

2025 HTCondor European Workshop Showcases Advances in High Throughput Computing

User Spotlights

What We Do

The OSG facilitates access to distributed high throughput computing for research in the US. The resources accessible through the OSG are contributed by the community, organized by the OSG, and governed by the OSG Consortium. In the last 12 months, we have provided more than 1.2 billion CPU hours to researchers across a wide variety of projects.

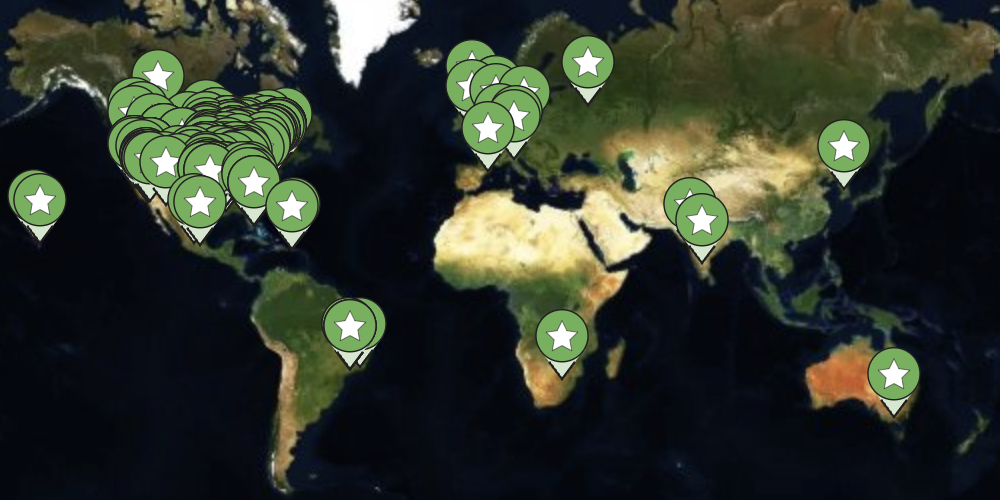

Submit Locally, Run Globally

Researchers can run jobs on the OSG from their home institution or from an OSG-Operated Access Point (available for US-based research and scholarship).

Sharing Is Key

Sharing is a core principle of the OSG. Over 100 million CPU hours delivered on the OSG in the past year were opportunistic, contributed by university campuses, government-supported supercomputing facilities and research collaborations. Sharing allows individual researchers to access larger computing resources and large organizations to keep their utilization high.

Resource Providers

The OSG consists of computing and storage elements at over 100 individual sites spanning the United States. These sites, primarily at universities and national labs, range in size from a few hundred to tens of thousands of CPU cores.

The OSG Software Stack

The OSG provides an integrated software stack to enable high throughput computing; visit our technical documentation website for more information.

Coordinating CI Services

NSF’s Blueprint for National Cyberinfrastructure Coordination Services lays out the need for coordination services to bring together the distributed elements of a national CI ecosystem. It highlights OSG as providing distributed high throughput computing services to the U.S. research community.

Find Us!

Are you a resource provider wanting to join our collaboration? Contact us: [email protected].

Are you a user wanting more computing resources? Check with your local computing providers, or consider using an OSG-Operated Access Point, which has access to the OSPool (available to US-based academic, government, and non-profit research projects).

For any other inquiries, reach us at: [email protected].

To see the breadth of the OSG's impact, explore our accounting portal.

Support

The activities of the OSG Consortium are supported by multiple projects and in-kind contributions from members. Significant funding is provided through:

PATh

The Partnership to Advance Throughput Computing (PATh) is an NSF-funded (#2030508) project to address the needs of the rapidly growing community embracing Distributed High Throughput Computing (dHTC) technologies and services to advance their research.

IRIS-HEP

The Institute for Research and Innovation in Software for High Energy Physics (IRIS-HEP) is an NSF-funded (#1836650) software institute established to meet the software and computing challenges of the HL-LHC, through R&D for the software for acquiring, managing, processing and analyzing HL-LHC data.