Data Exercise 1.2: File Compression and Testing Resource Requirements

The objective of this exercise is to refresh yourself on HTCondor file transfer, to implement file compression, and to begin examining the memory and disk space used by your jobs in order to plan larger batches, which we'll tackle in later exercises today.

Setup

The executable we'll use in this exercise and later today is the same blastx executable from previous exercises.

- Log in to login05.osgconnect.net

- Change into the blast-data folder that you created in the previous exercise.

Review: HTCondor File Transfer

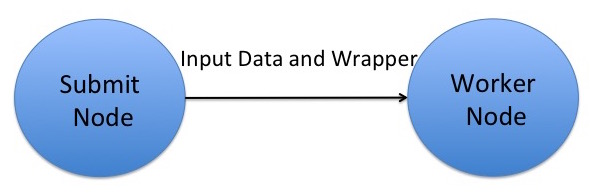

Recall that OSG does NOT have a shared filesystem!

Instead, HTCondor transfers your executable and input files (specified with the executable and transfer_input_files submit file directives, respectively) to a working directory on the execute node, regardless of how these files were arranged on the submit node.
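As a rough illustration of what "regardless of how these files were arranged" means in practice, the sketch below imitates the effect locally with cp. It does not call HTCondor, and the directory and file names (tools/bin/my_program, data/queries/sample.fa) are made up for the example.

```shell
#!/bin/bash
# Local imitation of the flattening effect: this uses cp, not HTCondor itself.
submit=$(mktemp -d)     # stands in for a directory tree on the submit node
scratch=$(mktemp -d)    # stands in for the job's working directory
mkdir -p "$submit/tools/bin" "$submit/data/queries"
touch "$submit/tools/bin/my_program" "$submit/data/queries/sample.fa"

# Each listed file lands at the top level, keeping only its base name
for f in "$submit/tools/bin/my_program" "$submit/data/queries/sample.fa"; do
    cp "$f" "$scratch/$(basename "$f")"
done

listing=$(ls "$scratch")
echo "$listing"
```

The practical consequence is that a script running in the job's working directory refers to its input files by name alone, without the directory they lived in on the submit node.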

In this exercise we'll use the same blastx example job that we used previously, but modify the submit file and test how much memory and disk space it uses on the execute node.

Start with a test submit file

We've started a submit file for you, below, which you'll add to in the remaining steps.

    executable =
    transfer_input_files =
    output = test.out
    error = test.err
    log = test.log
    request_memory =
    request_disk =
    request_cpus = 1
    requirements = (OSGVO_OS_STRING == "RHEL 7")
    queue

Implement file compression

In our first blast job from the Software exercises (1.1), the database files in the pdbaa directory were all transferred, as is, but we could instead transfer them as a single, compressed file using tar.

For this version of the job, let's compress our blast database files to send them to the execute node as a single tar.gz file (otherwise known as a tarball), by following the steps below:

- Change into the pdbaa directory and compress the database files into a single file called pdbaa_files.tar.gz using the tar command. (Note that this file will be different from the pdbaa.tar.gz file that you used earlier, because it will contain only the pdbaa files, and not the pdbaa directory itself.)

  Remember, a typical command for creating a tar file is:

      user@login05 $ tar -cvzf <COMPRESSED FILENAME> <LIST OF FILES OR DIRECTORIES>

  Replace <COMPRESSED FILENAME> with the name of the tarball that you would like to create and <LIST OF FILES OR DIRECTORIES> with a space-separated list of the files and/or directories that you want inside pdbaa_files.tar.gz.

  Move the resulting tarball to the blast-data directory.

- Create a wrapper script that will first decompress the pdbaa_files.tar.gz file, and then run blast.

  Because this file will now be our executable in the submit file, we'll also end up transferring the blastx executable with transfer_input_files. In the blast-data directory, create a new file, called blast_wrapper.sh, with the following contents:

      #!/bin/bash
      tar -xzvf pdbaa_files.tar.gz
      ./blastx -db pdbaa -query mouse.fa -out mouse.fa.result
      rm pdbaa.*

  Extra Files!

  The last line removes the resulting database files that came from pdbaa_files.tar.gz, as these files would otherwise be copied back to the submit server as perceived output since they're "new" files that HTCondor didn't transfer over as input.
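To make the difference between archiving the files and archiving the directory concrete, here is a minimal sketch that builds a tarball from inside a directory and then lists its contents with tar -tzf. It runs in a scratch directory with empty stand-in files; the three file names are assumptions for the demo, not a statement of what your pdbaa directory contains.

```shell
#!/bin/bash
# Sketch: build a tarball that holds only the files, with no parent directory.
# Everything happens in a scratch directory using empty stand-in files.
workdir=$(mktemp -d)
mkdir -p "$workdir/blast-data/pdbaa"
cd "$workdir/blast-data/pdbaa"
touch pdbaa.phr pdbaa.pin pdbaa.psq   # stand-ins for the real database files

# Running tar from inside the directory keeps "pdbaa/" out of the entry names
tar -czf pdbaa_files.tar.gz pdbaa.*

# Move the tarball up one level, next to where the submit file would live
mv pdbaa_files.tar.gz ..
cd ..

# List the archive contents without extracting anything
contents=$(tar -tzf pdbaa_files.tar.gz)
echo "$contents"
```

If the listing shows bare file names with no leading directory, then extracting the tarball in the job's working directory puts the database files right next to the wrapper script, which is what the -db pdbaa argument expects.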

List the executable and input files

Make sure to update the submit file with the following:

- Add the new executable (the wrapper script you created above).
- In transfer_input_files, list the blastx binary, the pdbaa_files.tar.gz file, and the input query file.

Commas, commas everywhere!

Remember that transfer_input_files accepts a comma-separated list of files, and that you need to list the full location of the blastx executable (blastx).

There will be no arguments, since the arguments to the blastx command are now captured in the wrapper script.
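One way to catch a typo in a comma-separated list before submitting is to split it and check that each entry exists. The helper below, check_inputs, is a hypothetical function written for this illustration (it is not an HTCondor tool), and it assumes file names contain no spaces. The demo deliberately leaves one file out to show what a failure looks like.

```shell
#!/bin/bash
# Sketch: report entries of a comma-separated file list that do not exist.
# check_inputs is a made-up helper for illustration, not part of HTCondor.
check_inputs() {
    local list missing=0 entry
    local -a entries
    # Drop whitespace so "a, b" and "a,b" behave the same
    list=$(echo "$1" | tr -d '[:space:]')
    # Split the cleaned string on commas
    IFS=',' read -r -a entries <<< "$list"
    for entry in "${entries[@]}"; do
        if [ ! -e "$entry" ]; then
            echo "missing: $entry"
            missing=$((missing + 1))
        fi
    done
    return "$missing"   # zero means every entry was found
}

# Demo in a scratch directory; mouse.fa is deliberately absent
demo=$(mktemp -d)
touch "$demo/blastx" "$demo/pdbaa_files.tar.gz"
cd "$demo"
result=$(check_inputs "blastx, pdbaa_files.tar.gz, mouse.fa")
echo "$result"
```

In your own directory you would pass the same string that you put after transfer_input_files in the submit file.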

Predict memory and disk requests from your data

Also, think about how much memory and disk to request for this job. It's good to start with values that are a little higher than you think a test job will need, but consider:

- How much memory blastx would use if it loaded all of the database files and the query input file into memory.
- How much disk space will be necessary on the execute server for the executable, all input files, and all output files (hint: the log file only exists on the submit node).
- Whether you'd like to request some extra memory or disk space, just in case.
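A quick way to turn the questions above into a number is to add up the sizes of the files involved. The sketch below defines a hypothetical helper, total_bytes, and tries it on two stand-in files of known size; the file names are invented for the demo. Keep in mind that with the wrapper script above, the tarball and the files extracted from it sit on disk at the same time, so both belong in the total.

```shell
#!/bin/bash
# Sketch: total the bytes of every file the job will keep on the execute node.
# total_bytes is a made-up helper for illustration.
total_bytes() {
    local total=0 size f
    for f in "$@"; do
        size=$(wc -c < "$f")       # size of one file in bytes
        total=$((total + size))
    done
    echo "$total"
}

# Demo with stand-in files of known size (names are invented)
demo=$(mktemp -d)
dd if=/dev/zero of="$demo/inputs.tar.gz" bs=1024 count=2 2>/dev/null
dd if=/dev/zero of="$demo/unpacked.db" bs=1024 count=5 2>/dev/null

bytes=$(total_bytes "$demo/inputs.tar.gz" "$demo/unpacked.db")
kb=$(( (bytes + 1023) / 1024 ))    # round up to whole KB
echo "about ${kb} KB"
```

For real files, pass the executable, every input file, the extracted database files, and an existing output file from an earlier run, then add whatever margin you are comfortable with.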

Look at the log file for your blastx job from Software exercise (1.1), and compare the memory and disk "Usage" to what you predicted from the files.

Make sure to update the submit file with more accurate memory and disk requests (you may still want to request slightly more than the job actually used).
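To pull the usage numbers out of a job log without scrolling, one option is to search for the "Partitionable Resources" table that HTCondor writes when a job terminates. The show_usage helper below is a hypothetical convenience function; the choice of four lines of context is an assumption and may need adjusting if your log lists more resource rows.

```shell
#!/bin/bash
# Sketch: print the resource usage table from an HTCondor job log.
# show_usage is a made-up helper; adjust -A if your table has more rows.
show_usage() {
    # -A 4 prints the matching header line plus the four lines after it
    grep -A 4 "Partitionable Resources" "$1"
}

# Example call (replace the placeholder with the log file from your own job):
# show_usage <LOG FILE>
```

The "Usage" column is what the job actually consumed, and the "Request" column is what the submit file asked for, so comparing the two shows how much headroom your request left.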

Run the test job

Once you have finished editing the submit file, go ahead and submit the job.

It should take a few minutes to complete, and then you can check to make sure that no unwanted files (especially the pdbaa database files) were copied back at the end of the job.

Run a du -sh on the directory with this job's input.

How does it compare to the directory from Software exercise (1.1), and why?

Conclusions

In this exercise, you:

- Used your data requirements knowledge from the previous exercise to write a job.

- Executed the job on a remote worker node and took note of the data usage.

When you've completed the above, continue with the next exercise.