Requesting an OSG Hosted CE¶

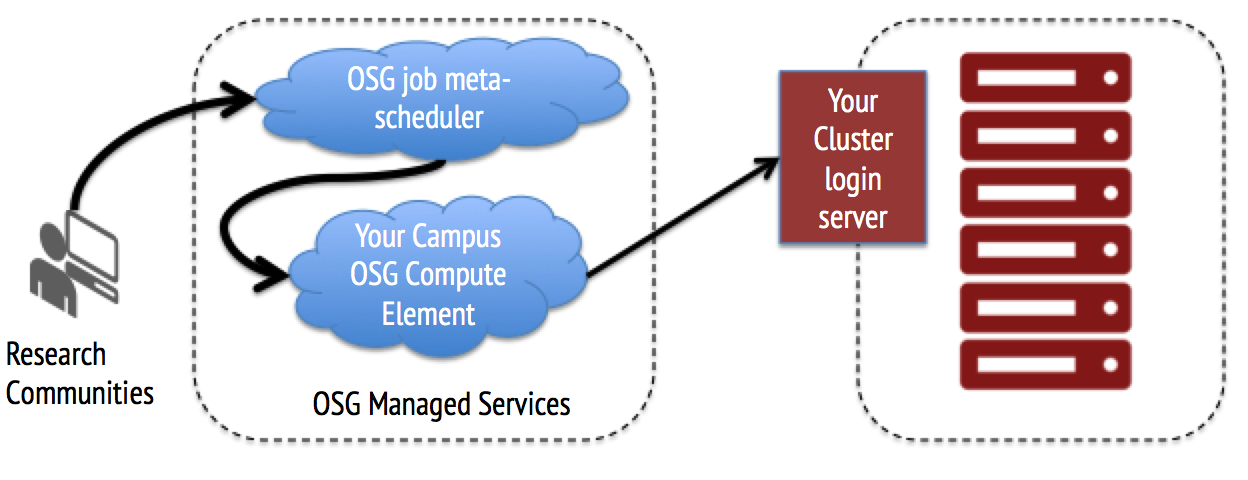

An OSG Hosted Compute Entrypoint (CE) is the entry point for resource requests coming from the OSG; it handles authorization and delegation of resource requests to your existing campus HPC/HTC cluster. Many sites set up their compute entrypoint locally.

As an alternative, OSG offers a no-cost Hosted CE option wherein the OSG team will host and operate the HTCondor Compute Entrypoint, and configure it for the communities that you choose to support.

This document explains the requirements and the procedure for requesting an OSG Hosted CE.

Running more than 10,000 resource requests

The Hosted CE can support thousands of concurrent resource request submissions. If you wish to run your own local compute entrypoint or expect to support more than 10,000 concurrently running OSG resource requests, see this page for installing the HTCondor-CE.

Before Starting¶

Before preparing your cluster for OSG resource requests, consider the following requirements:

- An existing compute cluster with a supported batch system running on a supported operating system

- Outbound network connectivity from the worker nodes (they can be behind NAT)

- One or more Unix accounts on your cluster's submit server with the following capabilities:

- Accessible via SSH key

- Use of SSH remote port forwarding (`AllowTcpForwarding yes`) and SSH multiplexing (`MaxSessions 10` or greater)

- Permission to submit jobs to your local cluster.

- Shared user home directories between the submit server and the worker nodes. Not required for HTCondor clusters: see this section for more details.

- Temporary scratch space on each worker node; site administrators should ensure that files in this directory are regularly cleaned out.

- OSG resource contributors must inform the OSG of any relevant changes to their site.
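The SSH settings in the requirements above can be checked before requesting a Hosted CE. The following is a minimal sketch, not an OSG-provided tool: the function name `check_sshd_config` is hypothetical, and the parser is deliberately simplified (it reads only global settings, stops at the first `Match` block, and ignores `Include` directives). It relies on the OpenSSH defaults of `AllowTcpForwarding yes` and `MaxSessions 10` when a keyword is absent.

```python
def check_sshd_config(text: str) -> list[str]:
    """Return a list of problems found in sshd_config-style text.

    Simplified sketch: only global settings are read; parsing stops at the
    first Match block and Include directives are not followed.
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comment lines.
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        if len(parts) != 2:
            continue
        key, value = parts[0].lower(), parts[1].strip()
        if key == "match":
            break
        # sshd keeps the first value it reads for each keyword.
        settings.setdefault(key, value)

    problems = []

    # Remote port forwarding works with "yes", "all", or "remote".
    forwarding = settings.get("allowtcpforwarding", "yes").lower()
    if forwarding not in ("yes", "all", "remote"):
        problems.append(f"AllowTcpForwarding is '{forwarding}'; remote forwarding is disabled")

    # SSH multiplexing needs MaxSessions of 10 or greater.
    raw_sessions = settings.get("maxsessions", "10")
    try:
        max_sessions = int(raw_sessions)
    except ValueError:
        problems.append(f"MaxSessions value '{raw_sessions}' is not a number")
    else:
        if max_sessions < 10:
            problems.append(f"MaxSessions is {max_sessions}; 10 or greater is required")

    return problems


if __name__ == "__main__":
    sample = "AllowTcpForwarding no\nMaxSessions 4\n"
    for problem in check_sshd_config(sample):
        print(problem)
```

On a real submit server, the effective configuration (for example, as printed by `sshd -T`) is a more reliable input than the raw configuration file, because it already accounts for defaults and included files.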

Site downtimes

For an improved turnaround time regarding an outage or downtime at your site, contact us and include `downtime` in the subject or body of the email.

For additional technical details, please consult the reference section below.

Don't meet the requirements?

If your site does not meet these conditions, please contact us to discuss your options for contributing to the OSG.

Scheduling a Planning Consultation¶

Before participating in the OSG, either as a computational resource contributor or consumer, we ask that you contact us to set up a consultation. During this consultation, OSG staff will introduce you and your team to the OSG and develop a plan to meet your resource contribution and/or research goals.

Preparing Your Local Cluster¶

After the consultation, ensure that your local cluster meets the requirements as outlined above. In particular, you should now know which accounts to create for the communities that you wish to serve at your cluster.

Also consider the size and number of jobs that the OSG should send to your site (e.g., number of cores, memory, GPUs, walltime) as well as their scheduling policy (e.g. preemptible backfill partitions).

Additionally, OSG staff may have directed you to follow installation instructions from one or more of the following sections:

(Recommended) Providing access to CVMFS¶

Maximize resource utilization; required for GPU support

Installing CVMFS on your cluster makes your resources more attractive to OSG user jobs! Additionally, if you plan to contribute GPUs to the OSG, installation of CVMFS is required.

Many users in the OSG make use of software modules and/or containers provided by their collaborations or by the OSG Research Facilitation team. To support these users without having to install specific software modules on your cluster, you may provide a distributed software repository system called CernVM File System (CVMFS).

In order to provide CVMFS at your site, you will need the following:

- A cluster-wide Frontier Squid proxy service with at least 50 GB of cache space; installation instructions for Frontier Squid are provided here.

- A local CVMFS cache per worker node (10 GB minimum, 20 GB recommended)

After setting up the Frontier Squid proxy and worker node local caches, install CVMFS on each worker node.
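The cache sizes listed above can be expressed as a small planning check. This is an illustrative sketch only: the function name `review_cvmfs_caches` and its arguments are hypothetical, and the thresholds are simply the figures stated in this section (50 GB for the Squid cache, 10 GB minimum and 20 GB recommended per worker node).

```python
# Thresholds taken from the requirements listed in this section.
SQUID_MIN_GB = 50
WORKER_MIN_GB = 10
WORKER_RECOMMENDED_GB = 20


def review_cvmfs_caches(squid_cache_gb: float, worker_cache_gb: float) -> list[str]:
    """Return notes about planned cache sizes; an empty list means no concerns."""
    notes = []
    if squid_cache_gb < SQUID_MIN_GB:
        notes.append(f"Squid cache is {squid_cache_gb} GB; at least {SQUID_MIN_GB} GB is required")
    if worker_cache_gb < WORKER_MIN_GB:
        notes.append(f"Worker cache is {worker_cache_gb} GB; at least {WORKER_MIN_GB} GB is required")
    elif worker_cache_gb < WORKER_RECOMMENDED_GB:
        notes.append(f"Worker cache is {worker_cache_gb} GB; {WORKER_RECOMMENDED_GB} GB is recommended")
    return notes


if __name__ == "__main__":
    for note in review_cvmfs_caches(squid_cache_gb=40, worker_cache_gb=15):
        print(note)
```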

(HTCondor clusters only) Installing the OSG Worker Node Client¶

Skip this section if you have CVMFS or shared home directories!

If you have CVMFS installed or shared home directories on your worker nodes, you can skip manual installation of the OSG Worker Node Client.

All OSG sites need to provide the OSG Worker Node Client on each worker node in their local cluster. OSG staff normally handle this for a Hosted CE, but doing so requires shared home directories across the cluster.

However, sites with an HTCondor batch system often have no shared file system set up. If you run an HTCondor site and it is easier to install and maintain the Worker Node Client on each worker node than to install CVMFS or maintain a shared file system, you have the following options:

- Install the Worker Node Client from RPM

- Install the Worker Node Client from tarball

Requesting an OSG Hosted CE¶

After preparing your local cluster, apply for a Hosted CE by filling out the cluster integration questionnaire. Your answers will help our operators submit resource requests to your local cluster of the appropriate size and scale.

Cluster Integration Questionnaire

Can I change my answers at a later date?

Yes! If you want the OSG to change the size (e.g., CPU, RAM), type (e.g., GPU requests), or number of resource requests, contact us with the FQDN of your login host and the details of your changes.

Finalizing Installation¶

After applying for an OSG Hosted CE, our staff will contact you with the following information:

- IP ranges of OSG hosted services

- Public SSH key to be installed in the OSG accounts

Once this is done, OSG staff will work with you and your team to begin submitting resource requests to your site, first with some tests, then with a steady ramp-up to full production.
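If you use the IP ranges of OSG hosted services to restrict access to the OSG accounts, a check like the following can confirm that a connecting address falls inside them. This is a minimal sketch using Python's standard `ipaddress` module; the function name `is_from_allowed_range` is hypothetical, and the ranges shown are reserved documentation addresses, not real OSG ranges. Use the ranges that OSG staff send you.

```python
import ipaddress


def is_from_allowed_range(address: str, allowed_ranges: list[str]) -> bool:
    """Return True if the address belongs to any of the given CIDR ranges."""
    ip = ipaddress.ip_address(address)
    networks = [ipaddress.ip_network(cidr) for cidr in allowed_ranges]
    # Membership is always False when the IP versions differ (IPv4 vs IPv6).
    return any(ip in network for network in networks)


if __name__ == "__main__":
    # Placeholder ranges reserved for documentation; NOT actual OSG ranges.
    example_ranges = ["192.0.2.0/24", "2001:db8::/32"]
    print(is_from_allowed_range("192.0.2.10", example_ranges))
    print(is_from_allowed_range("198.51.100.7", example_ranges))
```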

Validating contributions¶

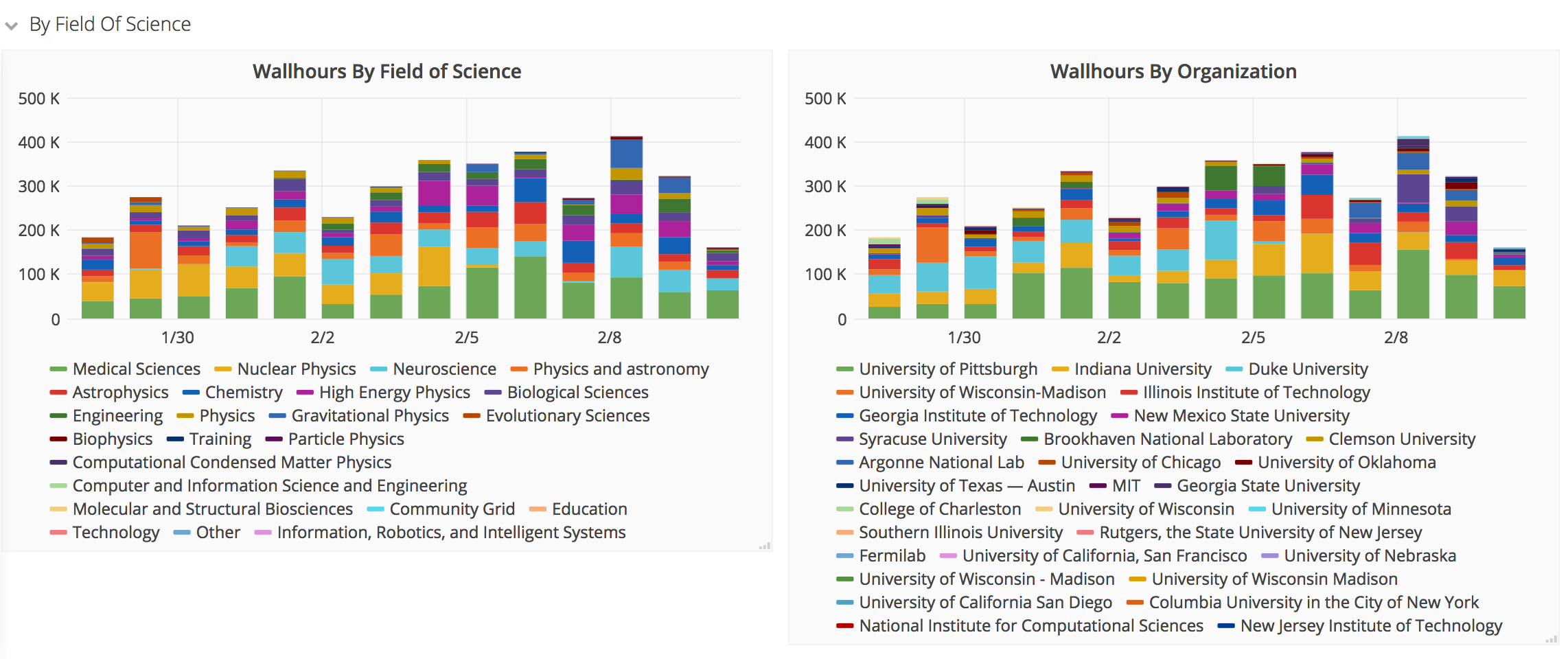

In addition to any internal validation processes that you may have, the OSG provides monitoring to view which communities and projects within said communities are accessing your site, their fields of science, and home institution. Below is an example of the monitoring views that will be available for your cluster.

To view your contributions, select your site from the Facility dropdown of the Payload job summary dashboard.

Note that accounting data may take up to 24 hours to display.

Reference¶

User accounts¶

Each resource pool in the OSG Consortium that uses Hosted CEs is mapped to your site as a fixed, specific account; we request that the account names be of the form osg01 through osg20.

The mappings from Unix usernames to resource pools are as follows:

| Username | Pool | Supported Research |
|---|---|---|
| osg01 | OSPool | Projects (primarily single PI) supported directly by the OSG organization |
| osg02 | GLOW | Projects coming from the Center for High Throughput Computing at the University of Wisconsin-Madison |
| osg03 | HCC | Projects coming from the Holland Computing Center at the University of Nebraska–Lincoln |
| osg04 | CMS | High-energy physics experiment from the Large Hadron Collider at CERN |
| osg05 | Fermilab | Experiments from the Fermi National Accelerator Laboratory |
| osg07 | IGWN | Gravitational wave detection experiments |
| osg08 | IGWN | Gravitational wave detection experiments |
| osg09 | ATLAS | High-energy physics experiment from the Large Hadron Collider at CERN |
| osg10 | GlueX | Study of quark and gluon degrees of freedom in hadrons using high-energy photons |
| osg11 | DUNE | Experiment for neutrino science and proton decay studies |
| osg12 | IceCube | Research based on data from the IceCube neutrino detector |
| osg13 | XENON | Dark matter search experiment |
| osg14 | JLab | Experiments from the Thomas Jefferson National Accelerator Facility |
| osg15 - osg20 | - | Unassigned |

For example, the activities in your batch system corresponding to the user osg02 will always be associated with the GLOW resource pool.
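Because the mapping is fixed, local accounting records can be attributed to resource pools with a simple lookup. The sketch below copies the username-to-pool table from this section; the names `POOL_BY_ACCOUNT`, `pool_for_account`, and `jobs_per_pool` are hypothetical, and the list of job owners stands in for whatever your batch system's accounting tool reports.

```python
from collections import Counter
from typing import Optional

# Username-to-pool mapping copied from the table in this section.
# Accounts not listed there (including osg15 through osg20) are treated as unassigned.
POOL_BY_ACCOUNT = {
    "osg01": "OSPool",
    "osg02": "GLOW",
    "osg03": "HCC",
    "osg04": "CMS",
    "osg05": "Fermilab",
    "osg07": "IGWN",
    "osg08": "IGWN",
    "osg09": "ATLAS",
    "osg10": "GlueX",
    "osg11": "DUNE",
    "osg12": "IceCube",
    "osg13": "XENON",
    "osg14": "JLab",
}


def pool_for_account(username: str) -> Optional[str]:
    """Return the resource pool for a Unix account, or None if unassigned or unknown."""
    return POOL_BY_ACCOUNT.get(username)


def jobs_per_pool(job_owners: list[str]) -> Counter:
    """Count jobs per resource pool, given the Unix owner of each job."""
    counts = Counter()
    for owner in job_owners:
        counts[pool_for_account(owner) or "Unassigned/other"] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical list of job owners taken from local batch accounting.
    owners = ["osg02", "osg02", "osg07", "osg08", "osg17", "alice"]
    print(jobs_per_pool(owners))
```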

Security¶

OSG takes multiple precautions to maintain security and prevent unauthorized usage of resources:

- Access to the OSG system with SSH keys is restricted to the OSG staff maintaining them

- Users are carefully vetted before they are allowed to submit jobs to OSG

- Jobs running through OSG can be traced back to the user that submitted them

- Job submission can quickly be disabled if needed

- Our security team is readily contactable in case of an emergency: https://osg-htc.org/security/#reporting-a-security-incident

How to Get Help¶

Is your site not receiving jobs from an OSG Hosted CE? Consult our status page for Hosted CE outages.

If there isn't an outage, you need help with setup, or otherwise have questions, contact us.